Stubsack: weekly thread for sneers not worth an entire post, week ending 5th October 2025 - awful.systems

gerikson @gerikson@awful.systems · Posts: 44 · Comments: 1,447 · Joined 2 yr. ago

Advent of Code Week 3 - you're lost in a maze of twisty mazes, all alike

Stubsack: weekly thread for sneers not worth an entire post, week ending Sunday 1 September 2024

Person who exercises her free association rights at conferences incites ire in Jameson Lopp

Butters do a 180 regarding statism as Daddy Trump promises to use filthy Fed FIAT to buy and hodl BTC

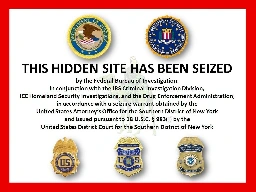

In an attempt to secure the libertarian vote, Trump promises to pardon Dread Pirate Roberts (while calling for the death penalty for other drug dealers)

Flood of AI-Generated Submissions ‘Final Straw’ for Small 22-Year-Old Publisher

Turns out that the basic mistakes spider runners fixed in the late 90s are arcane forgotten knowledge to our current "AI" overlords

Surprise level: zero