People really misunderstand what LLMs (Large Language Models) are. That last word is key: they're models. They take in reams of text from all across the web and build a statistical model of what a conversation looks like (or what code looks like, etc.). When you ask one a question, it gives you a response that looks right based on what it took in.
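To make the "it's a model of text" idea concrete, here's a deliberately tiny sketch. It is not how a real LLM works internally (those are neural networks trained on vastly more text); it's a toy bigram model that only records which word tends to follow which. The corpus and the names `CORPUS`, `train_bigrams`, and `generate` are made up for illustration.

```python
import random
from collections import defaultdict

# Hypothetical toy "training data"; a real LLM trains on vastly more text.
CORPUS = (
    "the proof follows from the lemma . "
    "the lemma follows from the definition . "
    "the result follows from the proof ."
)

def train_bigrams(text):
    """Map each word to the list of words seen right after it."""
    follows = defaultdict(list)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current].append(nxt)
    return follows

def generate(follows, start, max_words=12, seed=0):
    """Repeatedly pick a word that has followed the previous one before.

    Nothing here checks whether the output is true, only whether each
    step looks like something from the training text.
    """
    rng = random.Random(seed)
    out = [start]
    while len(out) < max_words and out[-1] in follows:
        out.append(rng.choice(follows[out[-1]]))
    return " ".join(out)

model = train_bigrams(CORPUS)
print(generate(model, "the"))
```

Every pair of adjacent words in the output appeared somewhere in the training text, so it reads like the right kind of sentence, but nothing in the code knows or cares whether the "proof" it describes is valid.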

Looking at how they do with math questions makes it click for a lot of people. You can ask an LLM for a mathematical proof, and it will give you one. If the result you asked about is commonly found online, it might be right, because that exact proof shows up many times in its training data and it can more or less regurgitate it. But if not, it's going to give you a proof that looks like the right kind of thing but is very unlikely to be correct. It doesn't understand math - it doesn't understand anything - it just uses its model to give you something that looks like the right kind of response.

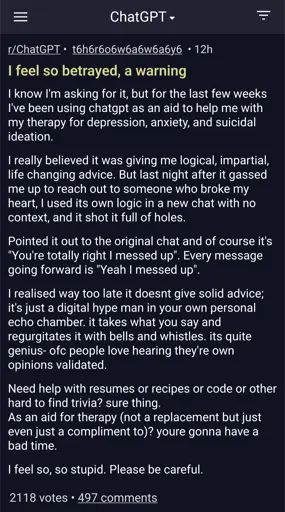

If you take the math paragraph above and replace the math stuff with therapy stuff, it's exactly the same (except therapy is less exacting than math, so it's harder to tell when the answers are wrong).

Oh, and since they don't actually understand anything (they're just software), they can't tell that something is a joke unless it's labeled as one. So when a redditor joked about adding glue to pizza sauce to help it stick to the pizza, and that comment got a ton of upvotes, the LLM treated it as a useful thing to work into responses about making pizza, which is how that viral response happened.