Kubernetes

cross-posted from: https://lemmy.ml/post/20234044

> Do you know about using Kubernetes debug containers? They're really useful for troubleshooting well-built, locked-down images that are running in your cluster. I was thinking it would be nice if k9s had this feature, and lo and behold, it has a plugin! I just had to add the plugin's snippet to my `${HOME}/.config/k9s/plugins.yaml`, run k9s, find the pod, press Enter to get into the pod's containers, select a container, and press Shift-D. The debug-container plugin uses the `nicolaka/netshoot` image, which comes with a bunch of useful tools. Easy debugging in k9s!

- kubernetes.io Kubernetes v1.31: Accelerating Cluster Performance with Consistent Reads from Cache

Kubernetes is renowned for its robust orchestration of containerized applications, but as clusters grow, the demands on the control plane can become a bottleneck. A key challenge has been ensuring strongly consistent reads from the etcd datastore, requiring resource-intensive quorum reads. Today, th...

- kubernetes.io Kubernetes Removals and Major Changes In v1.31

As Kubernetes develops and matures, features may be deprecated, removed, or replaced with better ones for the project's overall health. This article outlines some planned changes for the Kubernetes v1.31 release that the release team feels you should be aware of for the continued maintenance of your...

- www.sobyte.net Will adding K8S CPU limit reduce service performance?

Explore whether adding the K8S CPU limit will degrade service performance.

- kubernetes.io Kubernetes 1.30: Preventing unauthorized volume mode conversion moves to GA

With the release of Kubernetes 1.30, the feature to prevent the modification of the volume mode of a PersistentVolumeClaim that was created from an existing VolumeSnapshot in a Kubernetes cluster, has moved to GA! The problem: The Volume Mode of a PersistentVolumeClaim refers to whether the underlyin...

- kubernetes.io Kubernetes v1.30: Uwubernetes

Editors: Amit Dsouza, Frederick Kautz, Kristin Martin, Abigail McCarthy, Natali Vlatko Announcing the release of Kubernetes v1.30: Uwubernetes, the cutest release! Similar to previous releases, the release of Kubernetes v1.30 introduces new stable, beta, and alpha features. The consistent delivery o...

- kubernetes.io A Peek at Kubernetes v1.30

Authors: Amit Dsouza, Frederick Kautz, Kristin Martin, Abigail McCarthy, Natali Vlatko A quick look: exciting changes in Kubernetes v1.30 It's a new year and a new Kubernetes release. We're halfway through the release cycle and have quite a few interesting and exciting enhancements coming in v1.30. ...

I recently got this project (Tekton) recommended to me as a more natively connected CI/CD solution (I would probably be more interested in the CI part, as I already have argo-cd running). It seems very interesting, and development seems reasonably active. The only thing I am curious about (and the reason I made this post, besides maybe making more people aware that it exists) is how active the Tekton Hub (https://hub.tekton.dev/) is.

So, maybe somebody here has some information on that. I am not using Tekton (yet), but I read somewhere in the documentation that this hub is supposed to be the place to get reusable components. Seeing the actual activity there put me off the project a little, because a lot of things are at version 0.1 and were last updated one or two years ago. Maybe that only happens because I am not logged in, but it certainly looks weird.

So, do you have any experience with Tekton? How do you feel about it?

- matduggan.com K8s Service Meshes: The Bill Comes Due

Kubernetes Service Meshes aren't free anymore, what teams need to know moving forward.

- www.jimangel.io A Practical Guide to Running NVIDIA GPUs on Kubernetes

Set up an NVIDIA RTX GPU on bare-metal Kubernetes, covering driver installation on Ubuntu 22.04, configuration, and troubleshooting.

- kubernetes.io Kubernetes v1.29: Mandala

Authors: Kubernetes v1.29 Release Team Editors: Carol Valencia, Kristin Martin, Abigail McCarthy, James Quigley Announcing the release of Kubernetes v1.29: Mandala (The Universe), the last release of 2023! Similar to previous releases, the release of Kubernetes v1.29 introduces new stable, beta, and...

- kubernetes.io Gateway API v1.0: GA Release

Authors: Shane Utt (Kong), Nick Young (Isovalent), Rob Scott (Google) On behalf of Kubernetes SIG Network, we are pleased to announce the v1.0 release of Gateway API! This release marks a huge milestone for this project. Several key APIs are graduating to GA (generally available), while other signif...

- kubernetes.io Kubernetes Removals, Deprecations, and Major Changes in Kubernetes 1.29

Authors: Carol Valencia, Kristin Martin, Abigail McCarthy, James Quigley, Hosam Kamel As with every release, Kubernetes v1.29 will introduce feature deprecations and removals. Our continued ability to produce high-quality releases is a testament to our robust development cycle and healthy community....

One of the biggest problems of #kubernetes is complexity. @thockin shares his insights in his #KubeCon keynote. I've seen that time and again with my users, as well as in our Logz.io DevOps Pulse yearly survey. Maintainers aren't the end users of @kubernetes, which doesn't help.

\#KubeCon #ObservabilityDay? It’s time to talk about the unspoken challenges of #monitoring #Kubernetes: the bloat of metric data, the high churn rate of pod metrics, configuration complexity, and so much more. https://horovits.medium.com/f30c58722541 \#observability #devops #SRE @kubernetes @linuxfoundation

- chrisnicola.de Kubernetes, JWT, Istio, and other fun stuff

I recently ran into a problem that involved a complete overhaul of the application authorization mechanism within a Kubernetes cluster. Here's what I came up with.

It’s time to talk about the unspoken challenges of monitoring #Kubernetes: the bloat of metric data, the high churn rate of pod metrics, configuration complexity, and so much more. https://horovits.medium.com/f30c58722541 \#kubecon @kubernetes #k8s #monitoring #observability #devops #SRE @victoriametrics

cross-posted from: https://lemmy.zip/post/3942293

> We need to deploy a Kubernetes cluster at v1.27. We need that version because it comes with a particular feature gate that we need, which moved to beta and became enabled by default in that version.
>
> Is there any way to check which feature gates are enabled or disabled in a particular GKE or EKS cluster version without having to check the kubelet configuration inside a deployed cluster node? I don't want to deploy a cluster just to check this.
>
> I've checked both the GKE and EKS changelogs and docs, but I couldn't find a list of enabled/disabled feature gates.
>
> Thanks in advance!

I installed K3s for some hobby projects over the weekend and, so far, I have been very impressed with it.

This got me thinking that it could be a nice, cheap alternative to setting up an EKS cluster on AWS, something I found to be both expensive and painful for the availability that we needed.

Is anybody using K3s in production? Is it OK under load? How have upgrades and compatibility been?

- github.com GitHub - minio/operator: Simple Kubernetes Operator for MinIO clusters :computer:

Is anyone using the minio-operator? I'm hesitant because I can't find a lot of documentation on how to recover from cluster outages or partial disk failures.

- github.com GitHub - emberstack/kubernetes-reflector: Custom Kubernetes controller that can be used to replicate secrets, configmaps and certificates.

I was wondering how I could use a wildcard lets encrypt certificate with different Ingresses in different namespaces and found this at the cert manager documentation. Quite easy to setup, just add some annotations and the certificate (and any other secret or configmap) will be automatically reflected to given namespaces.
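
With cert-manager, the reflector annotations can be placed on the issued Secret through the Certificate's `secretTemplate` field, so they survive renewals. A minimal sketch follows; the helper name, the certificate and Secret names, the `*.example.com` domain, and the `letsencrypt-dns` ClusterIssuer (a wildcard from Let's Encrypt needs a DNS-01 solver) are all hypothetical placeholders:

```shell
# Hypothetical helper: issue a wildcard certificate whose Secret carries
# kubernetes-reflector annotations, so reflector copies it into other namespaces.
#   $1 = namespace that owns the Certificate (e.g. cert-manager)
#   $2 = comma-separated list of target namespaces
apply_wildcard_cert() {
  local ns="$1" targets="$2"
  kubectl apply -f - <<EOF
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: wildcard-example-com        # placeholder name
  namespace: ${ns}
spec:
  secretName: wildcard-example-com-tls
  dnsNames:
    - "*.example.com"               # placeholder domain
  issuerRef:
    name: letsencrypt-dns           # placeholder DNS-01 ClusterIssuer
    kind: ClusterIssuer
  secretTemplate:
    annotations:
      reflector.v1.k8s.emberstack.com/reflection-allowed: "true"
      reflector.v1.k8s.emberstack.com/reflection-allowed-namespaces: "${targets}"
      reflector.v1.k8s.emberstack.com/reflection-auto-enabled: "true"
      reflector.v1.k8s.emberstack.com/reflection-auto-namespaces: "${targets}"
EOF
}
```

For example, `apply_wildcard_cert cert-manager "app-a,app-b"` would let each Ingress in `app-a` and `app-b` reference `wildcard-example-com-tls` by name.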

- buoyant.io Kubernetes 1.28: Revenge of the Sidecars?

If you're using Kubernetes, you've probably heard the term "sidecar" by now. What might surprise you, however, is that Kubernetes itself has no built-in notion of sidecars—at least, until now.

- github.com GitHub - kubecost/kubectl-cost: CLI for determining the cost of Kubernetes workloads

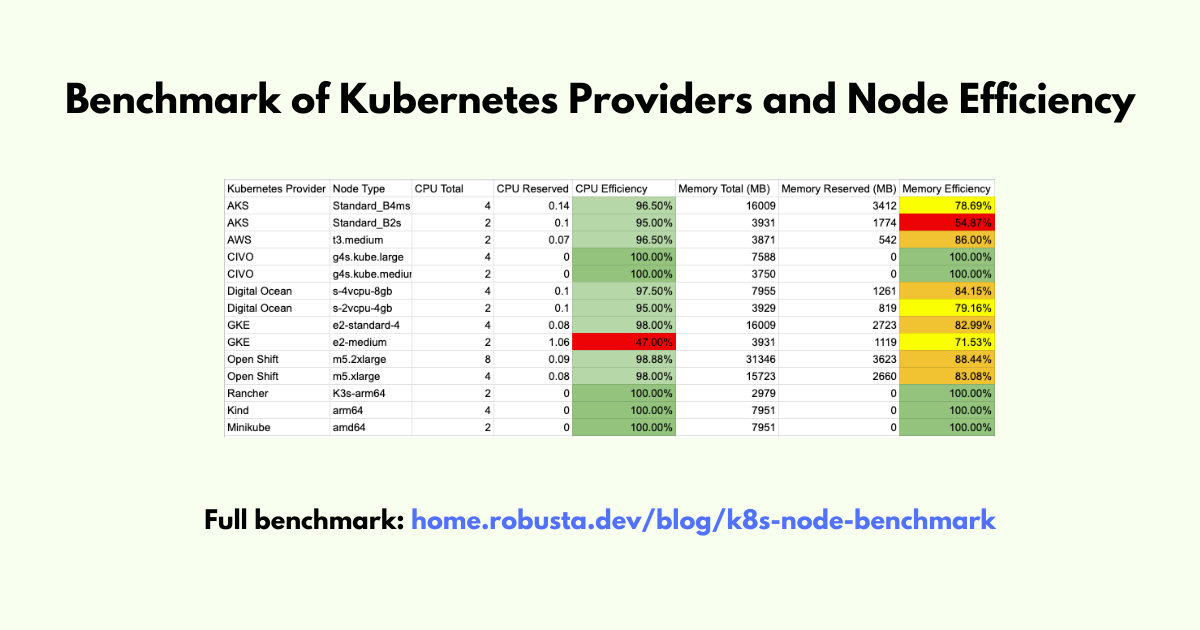

- home.robusta.dev When Is a CPU Not a CPU? Benchmark of Kubernetes Providers and Node Efficiency. | Robusta

How much overhead do Kubernetes providers take from each node?

- github.com GitHub - sustainable-computing-io/kepler: Kepler (Kubernetes-based Efficient Power Level Exporter) uses eBPF to probe performance counters and other system stats, use ML models to estimate workload energy consumption based on these stats, and exports them as Prometheus metrics

- github.com GitHub - collabnix/kubezilla500: Building a largest Kubernetes Community Cluster

An interesting project to have a look at.

Gorilla-CLI converts natural-language requests into shell commands. No OpenAI keys needed!

https://github.com/gorilla-llm/gorilla-cli

Today, I wanted to patch my nodelocaldns DaemonSet so that it doesn't run on Fargate nodes. Of course, I don't remember the exact schema for that kind of patch, so I asked Gorilla:

`$ gorilla show me how to patch a daemonset using kubectl to add nodeaffinity that matches expression eks.amazonaws.com/compute-type notin Fargate`

Gorilla responded with:

`kubectl -n kube-system patch daemonset node-local-dns --patch '{"spec": {"template": {"spec": {"affinity": {"nodeAffinity": {"requiredDuringSchedulingIgnoredDuringExecution": {"nodeSelectorTerms": [{"matchExpressions": [{"key": "eks.amazonaws.com/compute-type","operator": "NotIn","values": ["fargate"]}]}]}}}}}}'`

Close enough! It just missed a trailing `}`.

Really impressed.
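
For reference, here is the same strategic merge patch with the missing closing brace restored, wrapped in a small helper. The `exclude_fargate` function and `FARGATE_PATCH` variable are illustrative names; the DaemonSet, namespace, label key, and value are taken from Gorilla's command above:

```shell
# Required node affinity that keeps a DaemonSet off EKS Fargate nodes.
# Same patch Gorilla produced, with the final closing brace added back.
FARGATE_PATCH='{"spec":{"template":{"spec":{"affinity":{"nodeAffinity":{"requiredDuringSchedulingIgnoredDuringExecution":{"nodeSelectorTerms":[{"matchExpressions":[{"key":"eks.amazonaws.com/compute-type","operator":"NotIn","values":["fargate"]}]}]}}}}}}}'

# Hypothetical helper: apply the patch to a DaemonSet.
#   $1 = DaemonSet name (default node-local-dns)
#   $2 = namespace (default kube-system)
exclude_fargate() {
  local ds="${1:-node-local-dns}" ns="${2:-kube-system}"
  kubectl -n "$ns" patch daemonset "$ds" --patch "$FARGATE_PATCH"
}
```

Running `exclude_fargate` with no arguments patches `node-local-dns` in `kube-system`; the patch now has nine `{` and nine `}`.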

Look, I get it. Docker started the whole movement. But if you're an OSS vendor, do your users a solid: don't use Docker Hub for image hosting. Between ghcr.io (GitHub), Quay, and others, there are plenty of free choices that don't impose rate limits on users. Unless you want your users to need a Docker subscription, FOSS projects should host their images somewhere that doesn't rate limit.

I'd love to hear some stories about how you or your organization is using Kubernetes for development! My team is experimenting with using it because our "platform" is getting into the territory of too large to run or manage on a single developer machine. We've previously used Docker Compose to enable starting things up locally, but that started getting complicated.

The approach we're trying now is to have a Helm chart to deploy the entire platform to a k8s namespace unique to each developer and then using Telepresence to connect a developer's laptop to the cluster and allow them to run specific services they're working on locally.
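
A minimal sketch of the per-developer deploy described above, assuming a single umbrella chart for the whole platform and a `dev-<name>` namespace convention (both hypothetical, as is the `dev_up` name and the `platform` release name):

```shell
# Hypothetical helper: install or upgrade the whole platform chart into a
# namespace dedicated to one developer (dev-<name>).
#   $1 = chart path (placeholder, e.g. ./charts/platform)
#   $2 = developer name (defaults to $USER)
dev_up() {
  local chart="$1" dev="${2:-$USER}"
  local ns="dev-${dev}"
  helm upgrade --install platform "$chart" \
    --namespace "$ns" --create-namespace \
    --wait
}
```

For example, `dev_up ./charts/platform alice` installs or upgrades the release in `dev-alice`. Helm 3 scopes release names to a namespace, so every developer can reuse the same release name.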

This seems to be working well, but now I'm finding myself concerned with resource utilization in the cluster as devs don't remember to uninstall or scale down their workloads when they're not active any more, leading to inflation of the cluster size.
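
One low-effort mitigation, sketched under the assumption that each developer namespace only holds that developer's copy of the platform, is to scale idle namespaces to zero rather than uninstall them. The `park_dev_namespace` name is hypothetical:

```shell
# Hypothetical helper: "park" an idle developer namespace by scaling its
# Deployments and StatefulSets to zero replicas, leaving the Helm release in place.
#   $1 = developer namespace (e.g. dev-alice)
park_dev_namespace() {
  local ns="$1"
  kubectl scale deployment --all --replicas=0 -n "$ns"
  kubectl scale statefulset --all --replicas=0 -n "$ns"
}
```

For example, `park_dev_namespace dev-alice`. Scaling to zero keeps the release and its configuration around; `helm uninstall <release> -n dev-alice` would remove it entirely.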

Would love to hear some stories from others!

YouTube Video

Although it infantilizes k8s quite a bit, this video REALLY helped me when I started my cloud native journey

cross-posted from: https://lemmy.run/post/10475

> ## Testing Service Accounts in Kubernetes
>
> Service accounts in Kubernetes provide a secure way for applications and services to authenticate to and interact with the Kubernetes API. Testing service accounts ensures that they work as intended and are secure. In this guide, we will explore different methods of testing service accounts in Kubernetes.
>
> ### 1. Verifying Service Account Existence
>
> To start testing service accounts, first make sure they exist in your Kubernetes cluster. The following command lists the service accounts in the current namespace (add `--all-namespaces` to see every namespace):
>
> ```bash
> kubectl get serviceaccounts
> ```
>
> Verify that the service account you want to test appears in the output. If it's missing, create it using a YAML manifest or the `kubectl create serviceaccount` command.
>
> ### 2. Checking Service Account Permissions
>
> After confirming that the service account exists, the next step is to verify its permissions. A service account gets its permissions from Roles or ClusterRoles bound to it through RoleBindings or ClusterRoleBindings; these define which resources it can access and which actions it can perform.
>
> To check the permissions of a service account, use the `kubectl auth can-i` command. For example, to check whether a service account can create pods, run:
>
> ```bash
> kubectl auth can-i create pods --as=system:serviceaccount:<namespace>:<service-account>
> ```
>
> Replace `<namespace>` with the desired namespace and `<service-account>` with the name of the service account.
>
> ### 3. Testing Service Account Authentication
>
> Service accounts authenticate with the Kubernetes API using bearer tokens. To test service account authentication, retrieve a token for the service account and use it to authenticate requests.
>
> On clusters that have a token Secret for the service account, you can read the token from that Secret:
>
> ```bash
> kubectl get secret <service-account-token-secret> -o jsonpath="{.data.token}" | base64 --decode
> ```
>
> Replace `<service-account-token-secret>` with the actual name of the Secret associated with the service account. This command decodes and prints the service account token. Since Kubernetes v1.24, these token Secrets are no longer generated automatically; on newer clusters, `kubectl create token <service-account>` issues a short-lived token instead.
>
> You can then use the token to authenticate requests to the Kubernetes API, for example by sending it in an `Authorization: Bearer <token>` header with `curl` or from a small program.
>
> ### 4. Testing Service Account RBAC Policies
>
> Role-Based Access Control (RBAC) policies govern the access permissions of service accounts. It's crucial to test these policies to ensure service accounts have the appropriate level of access.
>
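
As a quick complement to the `kubectl auth can-i` check in step 2, a small helper can print everything a service account is allowed to do and spot-check a few verbs. The function name and the sample verbs are illustrative assumptions, not part of the guide:

```shell
# Hypothetical helper: summarise the RBAC permissions of a service account
# by impersonating it with `kubectl auth can-i`.
#   $1 = namespace, $2 = service account name
sa_rbac_report() {
  local ns="$1" sa="$2"
  local who="system:serviceaccount:${ns}:${sa}"
  # Full list of rules the account is granted in this namespace.
  kubectl auth can-i --list --as="$who" -n "$ns"
  # Spot checks; each kubectl call prints "yes" or "no".
  local check
  for check in "get pods" "create pods" "delete secrets"; do
    # $check is left unquoted on purpose so it splits into verb + resource.
    printf '%-16s %s\n' "$check" "$(kubectl auth can-i $check --as="$who" -n "$ns")"
  done
}
```

For example, `sa_rbac_report my-namespace my-service-account`. Impersonation with `--as` requires that your own user is allowed to impersonate service accounts.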
> One way to test RBAC policies is to create a Pod that uses the service account you want to test and attempt actions that the service account should or shouldn't be allowed to perform. Observe the behavior and verify that access is granted or denied as expected.
>
> ### 5. Automated Testing
>
> To streamline the testing process, you can create automated tests using testing frameworks and tools specific to Kubernetes. For example, the Kubernetes Test Framework (KTF) provides a set of libraries and utilities for writing tests for Kubernetes components, including service accounts.
>
> Using such frameworks lets you write comprehensive test cases that validate service account behavior, permissions, and RBAC policies automatically.
>
> ### Conclusion
>
> Testing service accounts in Kubernetes ensures their proper functioning and adherence to security policies. By verifying that service accounts exist, checking their permissions, testing authentication, and validating RBAC policies, you can confidently rely on service accounts in your Kubernetes deployments.
>
> Remember, service accounts are a critical security component, so it's important to regularly test and review their configuration to prevent unauthorized access and potential security breaches.

cross-posted from: https://lemmy.run/post/10206

> # Creating a Helm Chart for Kubernetes
>
> In this tutorial, we will learn how to create a Helm chart for deploying applications on Kubernetes. Helm is a package manager for Kubernetes that simplifies the deployment and management of applications. By using Helm charts, you can define and version your application deployments as reusable templates.
>
> ## Prerequisites
>
> Before we begin, make sure you have the following prerequisites installed:
>
> - Helm: Follow the official Helm documentation for installation instructions.
>
> ## Step 1: Initialize a Helm Chart
>
> To start creating a Helm chart, open a terminal and navigate to the directory where you want to create your chart. Then, run the following command:
>
> ```shell
> helm create my-chart
> ```
>
> This will create a new directory named `my-chart` with the basic structure of a Helm chart.
>
> ## Step 2: Customize the Chart
>
> Inside the `my-chart` directory, you will find several files and directories. The most important ones are:
>
> - `Chart.yaml`: This file contains metadata about the chart, such as its name, version, and dependencies.
> - `values.yaml`: This file defines the default values for the configuration options used in the chart.
> - `templates/`: This directory contains the template files for deploying Kubernetes resources.
>
> You can customize the chart by modifying these files and adding new ones as needed. For example, you can update the `Chart.yaml` file with your desired metadata and edit the `values.yaml` file to set default configuration values.
>
> ## Step 3: Define Kubernetes Resources
>
> To deploy your application on Kubernetes, you need to define the necessary Kubernetes resources in the `templates/` directory. Helm uses the Go template language to generate Kubernetes manifests from these templates.
>
> For example, you can create a `deployment.yaml` template to define a Kubernetes Deployment:
>
> ```yaml
> apiVersion: apps/v1
> kind: Deployment
> metadata:
>   name: {{ .Release.Name }}-deployment
> spec:
>   replicas: {{ .Values.replicaCount }}
>   selector:
>     matchLabels:
>       app: {{ .Release.Name }}
>   template:
>     metadata:
>       labels:
>         app: {{ .Release.Name }}
>     spec:
>       containers:
>         - name: {{ .Release.Name }}
>           image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
>           ports:
>             - containerPort: {{ .Values.containerPort }}
> ```
>
> This template uses the values defined in `values.yaml` to customize the Deployment's name, replica count, image, and container port. The `selector.matchLabels` block must match the Pod template's labels, because `apps/v1` Deployments require a selector.
>
> ## Step 4: Package and Install the Chart
>
> Once you have defined your Helm chart and customized the templates, you can package and install it on a Kubernetes cluster. To package the chart, run the following command:
>
> ```shell
> helm package my-chart
> ```
>
> This will create a `.tgz` file containing the packaged chart.
>
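
Before installing the packaged chart, it can help to lint it and render the templates locally, which needs no cluster. A minimal sketch; the `check_chart` helper and the trial `containerPort=8080` value are assumptions for illustration:

```shell
# Hypothetical helper: lint the chart and render its manifests locally,
# so template errors show up before anything is installed.
#   $1 = chart directory (defaults to ./my-chart from this tutorial)
check_chart() {
  local chart="${1:-my-chart}"
  helm lint "$chart"
  # The example Deployment template reads .Values.containerPort, which the
  # generated values.yaml may not define yet, so pass a trial value here.
  helm template my-release "$chart" --set containerPort=8080
}
```

Running `check_chart` prints the rendered Deployment, which is an easy way to confirm that the selector labels and the image reference come out as expected.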
> To install the chart on a Kubernetes cluster, use the following command:
>
> ```shell
> helm install my-release my-chart-0.1.0.tgz
> ```
>
> Replace `my-release` with the desired release name and `my-chart-0.1.0.tgz` with the name of your packaged chart.
>
> ## Conclusion
>
> Congratulations! You have learned how to create a Helm chart for deploying applications on Kubernetes. By leveraging Helm's package management capabilities, you can simplify the deployment and management of your Kubernetes-based applications.
>
> Feel free to explore the Helm documentation for more advanced features and best practices.
>
> Happy charting!

In March 2023, Argo CD completed a refactor of its release process in order to provide SLSA Level 3 provenance for container images and CLI binaries. The CNCF also commissioned a security audit of Argo CD, which was conducted by ChainGuard. The audit found that Argo CD achieved SLSA Level 3 v0.1 across the source, build, and provenance sections.

The Argo Project will next roll out attestations to Argo Rollouts, then to the remaining projects. SLSA recently announced the SLSA Version 1.0 specification, which Argo plans to embrace.