Very much smart people

It was the same with crypto TBH. It was a neat niche research interest until pyramid schemers with euphemisms for titles got involved.

With crypto, it was largely MLM scammers who started pumping it (futilely, for the most part) until Ross Ulbricht and the Silk Road leveraged it for black market sales.

Then Bitcoin, specifically, took off as a means of subverting bank regulations on financial transactions. This encouraged more big-ticket speculators to enter the market, leading to the JP Morgan sponsorship of Ethereum (NFTs were a big part of this scam).

There's a whole historical pedigree to each major crypto offering. Solana, for instance, is tied up in Howard Lutnick's play at crypto through Cantor Fitzgerald.

Interesting.

I guess AI isn't so dissimilar, with major 'sects' having major billionaire/corporate backers, sometimes aiming for specific niches.

Anthropic was rather infamously funded by FTX. DeepSeek came from a quant trading (and, to my memory, crypto mining) firm, and there's loose evidence the Chinese govt is 'helping' all its firms with data (or that they're sharing it with each other under the table, somehow). Many say Zuckerberg open-sourced Llama to 'poison the well' over OpenAI going closed.

Silk Road and other black market vendors existed well before the scams started. You could mail order drugs online when bitcoin was under $1; the first bubble pushed the price to about $30 before it crashed back to around $2. THEN the scams and market manipulation took off.

Later people forked the project to create new chains in order to run rug pulls and other modern crypto scams.

Don't forget that the development of Ethereum was funded in large part by Peter Thiel

Hot take: Adding "Prompt expert" to a resume is like adding "professional Googler".

There used to be some skill involved in getting search engines to give you the right results. These days, not so much, but originally you did have to enter the right kind of search terms, and a lot of people couldn't work that out.

Many years ago, back before Google became so dominant, I had a co-worker who could not get her head around the idea that you didn't in fact have to ask a search engine in the form of a question with a question mark on the end. It used to be somewhat of a skill.

This is actually very true. I always objected to knowing how to use Boolean operators in Google coming to be called "Dorking." I amassed a sizeable MP3 collection in the early oughts thanks to searching ".mp3" and finding people's public folders filled with their CD rips. Just out there, hanging freely in the internet wind.

These days SEO has rendered Google itself borderline useless, and IIRC they removed some operators at some point. I have to use DDG, Brave, and Leta (to search Google) if I want to find anything that's not just a URL for an obvious thing. And half the time none of that works anyway, and I can't even find things I've found before.

I'd trust the latter any day.

the latter just means IT expert

This image is clearly of my hands with an elastic band at the back of class two decades ago

Yeah but why am I arguing with them?

Maybe it's because they were stretching.

Wait till you talk to LinkedIn people interested in Quantum Physics

My favorite story was going on a date with a woman who by rights was very bright. She had a PhD and went on and on about quantum this and that. We were heading to the live music stage and taking a long L-shaped gravel path... I chirped, "Shall we hypotenuse it across the field?" She replied, "What's a hypotenuse?"

The Venn diagram of LinkedIn people who post about Quantum Physics and those who post about Deepak Chopra is almost a circle.

Seems like one more person that I didn't need to know existed but now I do, thanks

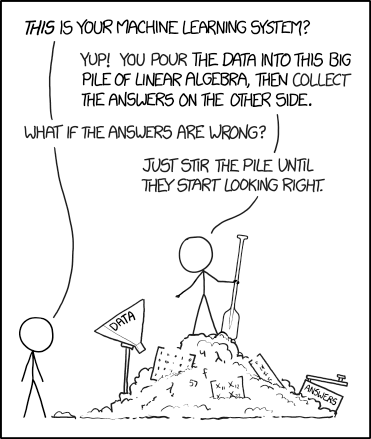

OK but what actually is this image?

Basic model of a neural net. The post is implying that you're arguing with bots.

https://en.wikipedia.org/wiki/Neural_network_(machine_learning)

Wouldn’t a bot recognize this though?

The post is implying that you’re arguing with bots.

Maybe, but it also might be suggesting that people are not fundamentally different.

Illustration of a neural network.

The simplest neural network (simplified). You input a set of properties (first column). Then you take several weighted sums of all of them, each with DIFFERENT weights (first set of lines). Then you apply a non-linearity to each sum, e.g. 0 if negative, keep the same otherwise (not shown).

You repeat this with potentially different numbers of outputs any number of times.

Then do this again, but so that your number of outputs is the dimension of your desired output, e.g. 2 if you want the sum of the inputs and their product computed (which is a fun exercise!). You may want to skip the non-linearity here or do something special™.

Simplest multilayer perceptron*.
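
The recipe described above (weighted sums, then a "0 if negative" non-linearity, repeated, then a final layer without the non-linearity) can be sketched in plain Python roughly like this. The 4-6-6-2 layer sizes are an assumption read off the pictured diagram, the random weights stand in for values a real network would learn by training, and `dense`, `relu` and `mlp_forward` are illustrative names, not any library's API.

```python
import random

def relu(v):
    """Elementwise non-linearity: 0 if negative, unchanged otherwise."""
    return [max(0.0, x) for x in v]

def dense(inputs, weights, biases):
    """One weighted sum of ALL inputs per output neuron, each with its own row of weights."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def random_layer(n_in, n_out, rng):
    """Hypothetical random initialisation; training would normally set these values."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out  # left at zero for simplicity
    return weights, biases

def mlp_forward(x, layers):
    """Hidden layers: weighted sums then ReLU. Output layer: weighted sums only."""
    h = x
    for weights, biases in layers[:-1]:
        h = relu(dense(h, weights, biases))
    weights, biases = layers[-1]
    return dense(h, weights, biases)

rng = random.Random(0)
sizes = [4, 6, 6, 2]  # 4 inputs, two hidden layers of 6, 2 outputs (assumed from the image)
layers = [random_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
print(mlp_forward([1.0, 2.0, 3.0, 4.0], layers))
```

Each row of weights is one fan of "lines" converging on a single dot, which is why every neuron in a layer is connected to every neuron in the layer before it.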

A neural network can be made with only one hidden layer (and, as has been mathematically proven, still approximate any continuous function on a bounded domain as closely as you like; it's just not as easily trained, and it needs a much higher number of neurons).
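
The one-hidden-layer claim is the universal approximation theorem. One concrete way to see it in one dimension, assuming ReLU neurons (the theorem covers other activations too): a single hidden layer whose neurons each "switch on" at an evenly spaced knot reproduces the piecewise-linear interpolation of a target function, and adding neurons shrinks the error. This is an illustration, not a proof; the weights are constructed by hand rather than trained, and the function names are hypothetical.

```python
import math

def relu(z):
    return max(0.0, z)

def build_one_hidden_layer_net(f, n_hidden):
    """Hand-construct a one-hidden-layer ReLU net that interpolates f at
    n_hidden + 1 evenly spaced knots on [0, 1].

    net(x) = output_bias + sum_k output_weights[k] * relu(x + hidden_biases[k]),
    where every hidden neuron has input weight 1.
    """
    knots = [k / n_hidden for k in range(n_hidden + 1)]
    values = [f(t) for t in knots]
    slopes = [(values[k + 1] - values[k]) / (knots[k + 1] - knots[k])
              for k in range(n_hidden)]
    # Hidden neuron k is 0 left of knot k and rises with slope 1 after it;
    # its output weight is how much the interpolant's slope changes at that knot.
    output_weights = [slopes[0]] + [slopes[k] - slopes[k - 1] for k in range(1, n_hidden)]
    hidden_biases = [-t for t in knots[:-1]]
    return hidden_biases, output_weights, values[0]

def net(x, params):
    hidden_biases, output_weights, output_bias = params
    return output_bias + sum(w * relu(x + b) for w, b in zip(output_weights, hidden_biases))

def max_error(f, n_hidden, samples=1001):
    """Worst gap between the net and f over a fine grid on [0, 1]."""
    params = build_one_hidden_layer_net(f, n_hidden)
    xs = [i / (samples - 1) for i in range(samples)]
    return max(abs(net(x, params) - f(x)) for x in xs)

def target(x):
    return math.sin(2 * math.pi * x)

for n in (2, 8, 32, 128):
    print(f"{n:4d} hidden neurons -> max error {max_error(target, n):.5f}")
```

Accuracy comes from width here: the error shrinks roughly with the square of the knot spacing, so you keep paying in neurons, which is the "much higher number of neurons" caveat.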

To elaborate: the dots are the simulated neurons, the lines the links between neurons. The pictured neural net has four inputs (on the left) leading to the first layer, where each neuron makes a decision based on the input it receives and a predefined threshold, and then passes its answer on to the second layer, which then connects to the two outputs on the right.
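
A sketch of the kind of neuron described there: it fires when the weighted sum of its inputs reaches a threshold. The weights and thresholds below are hand-picked for illustration (in a trained network they are learned, and the hard threshold is usually replaced by a smoother activation such as ReLU or a sigmoid); `threshold_neuron`, `and_gate` and `or_gate` are hypothetical names.

```python
def threshold_neuron(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs reaches the threshold, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0

def and_gate(a, b):
    # Both inputs must be on to reach a threshold of 2.
    return threshold_neuron([a, b], [1, 1], threshold=2)

def or_gate(a, b):
    # Either input alone reaches a threshold of 1.
    return threshold_neuron([a, b], [1, 1], threshold=1)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b), or_gate(a, b))
```

Same weights, different threshold, different decision: the threshold does real work, which is why in practice it becomes a trainable parameter rather than a fixed one.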

Multilayer perceptron

Logic.

Many player cat's cradle

Even I know what this is and I don't have a background in AI/ML.

isn't this the Trial of the Sekhemas in PoE2?

As a data scientist who also plays POE2, I laughed at this a lot longer than I should have

Probably bc they forgot the bias nodes

(/s but really I don't understand why no one ever includes them in these diagrams)
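
On the bias question, one plausible reason diagrams skip them: a bias can be folded into the weights. Adding a bias b to a neuron is the same as giving it an extra input node that always outputs 1, linked with weight b, so some diagrams draw that constant node and many just leave it implicit. A minimal check of that equivalence (function names and numbers are made up for illustration):

```python
def neuron_with_bias(x, w, b):
    """Weighted sum of the inputs plus a separate bias term."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def neuron_with_bias_node(x, w, b):
    """Same neuron, diagram-style: an extra input fixed at 1.0 whose link weight is b."""
    return sum(wi * xi for wi, xi in zip(w + [b], x + [1.0]))

x, w, b = [0.5, -2.0, 3.0], [1.0, 0.25, -0.5], 0.75
print(neuron_with_bias(x, w, b), neuron_with_bias_node(x, w, b))
```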

Same as if you’d ask a crypto bro how a blockchain actually works. All those self proclaimed Data Scientists who were able to use pytorch once successfully by following a tutorial, just don’t want to die.

Something to do with Large Language Models?

It's a neural network diagram

Oh

import tensorflow as tf

That particular network could never put up a good argument. At best, it might estimate, or predict numbers or 1-2 discrete binary states.

That's what it actually does, people are just this good at anthropomorphising

I've never had it well explained why there are (for example, in this case) two intermediary steps, and 6 blobs in each. That much has been a dark art, at least in the "intro to blah blah" blogposts.

Probably because there's no good reason.

At least one intermediate layer is needed to make it expressive enough to fit any data, but if you make it wide enough (increasing the blobs) you don't need more layers.

At that point you then start tuning it /adjusting the number of layers and how wide they are until it works well on data it's not seen before.

At the end, you're just like "huh I guess two hidden layers with a width of 6 was enough."
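
The tuning loop described above (try a few sizes, keep whichever does best on data the model hasn't seen) can be sketched like this. To stay short it swaps in a simplification rather than real backprop training: each candidate is a one-hidden-layer ReLU network whose hidden weights are random and frozen, with only the output layer fitted by least squares (a random-features model). The synthetic sine data, the list of widths and the function names are all assumptions for illustration; it needs NumPy.

```python
import numpy as np

def fit_random_feature_net(x_train, y_train, width, rng):
    """One hidden ReLU layer with random, frozen input weights; only the
    output weights are fitted, by least squares (not gradient descent)."""
    w_in = rng.normal(size=(x_train.shape[1], width))
    b_in = rng.normal(size=width)
    hidden = np.maximum(0.0, x_train @ w_in + b_in)
    w_out, *_ = np.linalg.lstsq(hidden, y_train, rcond=None)

    def predict(x):
        return np.maximum(0.0, x @ w_in + b_in) @ w_out

    return predict

def pick_width(x, y, widths, seed=0):
    """Hold out 20% of the data and keep the width with the lowest held-out MSE."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(x))
    split = int(0.8 * len(x))
    train, val = idx[:split], idx[split:]
    scores = {}
    for width in widths:
        model = fit_random_feature_net(x[train], y[train], width, rng)
        scores[width] = float(np.mean((model(x[val]) - y[val]) ** 2))
    best = min(scores, key=scores.get)
    return best, scores

# Synthetic data, made up for illustration: a noisy sine wave.
data_rng = np.random.default_rng(1)
x = data_rng.uniform(-3.0, 3.0, size=(200, 1))
y = np.sin(x[:, 0]) + 0.1 * data_rng.normal(size=200)
best, scores = pick_width(x, y, widths=[1, 2, 6, 20, 100])
print(best, scores)
```

Which width wins depends on the data and the random seed, so a number like "6" is plausibly the output of a search like this rather than something derived from first principles.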

All seems pretty random, and not very scientific. Why not try 5 layers, or 50, 500? A million nodes? It's just a bit arbitrary.

Glorious.

Being the devil's advocate here.

Do you guys really understand all of your tools down to the technical level? People can make good use of AI/LLMs without needing to understand NNs, weights and biases, the same way I make good use of a microwave or a rangefinder without understanding the deep levels of electromagnetic waves and so on. Fun meme tho.

This is an extremely basic intro level ML topic. If you cannot even identify a fully connected network (or "MLP") then you don't know anything about the subject. You don't need to know how to hand compute a back prop iteration to know what this is.

This is like claiming to be working as an electrician and not knowing how electricity works.

Someone who uses AI to code or make images isn’t doing machine learning any more than a pilot is doing aerospace engineering. And someone claiming to be an aerospace engineer can’t say that they don’t understand fluid dynamics.

If someone is claiming to be in the machine learning field, not recognizing a fundamental technique of machine learning is a dead giveaway that they’re lying. This kind of diagram is used in introductory courses for machine learning, anyone with any competence in the field would know what it was.

You're not a "microwave expert". Claiming to be one would imply that you do understand the inner workings. I write code in Java everyday for my job, but I wouldn't claim to be a "Java expert" because I don't have exceptionally deep knowledge of its inner workings.

Usage of a tool does not make someone an expert of a tool. An expert can describe, at least at a high-level, why the tool works the way it does.

Sure but if you make your living with microwaves somehow you should know what a magnetron is/be able to recognize one. You don't have to know exactly how it works but like... This is fundamental stuff.

To further the analogy, if you make your living cooking using a microwave, you better know how one works, how micro-waves propagate, how they interfere with each other (superposition), creating either constructive or destructive interference, creating hot and cold pockets, how they are generated and where they come from in a microwave, as well as ideally how heat works... And so on. Otherwise you're just gonna end up with mushy food that has hot and cold spots, and not know why or how to fix the problem.

If I'm claiming expertise (not just proficiency), then yes, I would make it a point to know my tools down to the technical level.

The majority of "AI Experts" online that I've seen are business majors.

Then a ton of junior/mid software engineers who have used the OpenAI API.

Finally, there are the very, very few technical people who have interacted with models directly, maybe even trained some models and coded directly against them. And even then I don't think many of them truly understand what's going on in there.

Hell, I've been training models and using ML directly for a decade and I barely know what's going on in there. Don't worry, I get the image; I'm just calling out how frighteningly few people actually understand it, yet so many swear they know AI super well.

That's just the thing about neural networks: Nobody actually understands what's going on there. We've put an abstraction layer over how we do things that we know we will never be able to pierce.

I'd argue we know exactly what's going on in there; we just don't necessarily know, for any particular model, why it's going on in there.

Ding ding ding.

It all became basically magic, blind trial and error roughly ten years ago, with AlexNet.

After AlexNet, everything became increasingly more and more black box and opaque to even the actual PhD level people crafting and testing these things.

Since then, it has basically been 'throw all existing information of any kind at the model' to train it better, and then a bunch of basically slapdash optimization attempts which work for largely 'i dont know' reasons.

Meanwhile, we could be pouring even 1% of the money going toward LLMs and convolutional-network-derived models into other paradigms, such as trying to actually emulate real brains and real neuronal networks... but nope, everyone is piling into basically one approach.

That's not to say research on other paradigms is nonexistent, but it is barely existent in comparison.

I have a masters degree in statistics. This comment reminded me of a fellow statistics grad student that could not explain what a p-value was. I have no idea how he qualified for a graduate level statistics program without knowing what a p-value was, but he was there. I'm not saying I'm God's gift to statistics, but a p-value is a pretty basic concept in statistics.

Next semester, he was gone. Transferred to another school and changed to major in Artificial Intelligence.

I wonder how he's doing...

Feature Visualization: How neural networks build up their understanding of images

https://distill.pub/2017/feature-visualization/

Yeah, I've trained a number of models (as part of actual CS research, before all of this LLM bullshit), and while I certainly understand the concepts behind training neural networks, I couldn't tell you the first thing about what a model I trained is doing. That's the whole thing about the black box approach.

Also why it's so absurd when "AI" gurus claim they "fixed" an issue in their model that resulted in output they didn't want.

No, no you didn't.

Love this because I completely agree. "We fixed it and it no longer does the bad thing." Uh, no, incorrect. Unless you literally went through your entire dataset, stripped out every single occurrence of the thing and retrained it, there is no way that you 100% "fixed" it.

I once trained an AI in Matlab to spell my name.

I alternate between feeling so dumb because that is all that my model could do and feeling so smart because I actually understand the basics of what is happening with AI.

business majors are the worst i swear to god

They are literally what's causing the fall of our society.

Didn't you know? Being adept at business immediately makes you an expert in many science and engineering fields!

My wife is a business major.

I always tell her that the enemy is in my bed.

(I have no clue why she does not think that this is funny. ;))

I’ve given up attending AI conferences, events and meetups in my city for this exact reason. You show up for a talk called something like “Advances in AI” or “Inside AI” by a supposed guru from an AI company and get a 3-hour PowerPoint telling you to stop making PowerPoints by hand and start using ChatGPT to do it, concluding with a sales pitch for their 2-day course on how to get rich creating Kindle ebooks en masse.

Even the dev-oriented ones are painfully like this too. Why would you make your own when you could subscribe to ours instead? Just sign away all of your data and call this API, which will probably change in a month. You'll be so happy!

I have personally told coworkers that if they train a custom GPT, they should put "AI expert" on their resume, as that's more than 99% of people have done - and 99% of those people didn't do anything more than trick ChatGPT into doing something naughty once, a year ago, and now consider themselves "prompt engineers."

Absolutely agree there

Outside of low dimensional toy models, I don’t think we’re capable of understanding what’s happening. Even in academia, work on the ability to reliably understand trained networks is still in its infancy.

I remember studying "Probably Approximately Correct" learning and such, and it was a pretty cool way of building axioms, theorems, and proofs to bound and reason about ML models. To my knowledge, there isn't really anything like it for large networks; maybe someday.
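
For a flavour of that PAC-style reasoning, here is the classic bound for a finite hypothesis class H in the realizable case (picked as an illustration; not necessarily what the commenter studied): a learner that outputs any hypothesis consistent with m i.i.d. training examples has true error at most epsilon, with probability at least 1 - delta, whenever m satisfies the first line below. The second line works one example through with made-up numbers.

```latex
m \;\ge\; \frac{1}{\varepsilon}\left(\ln\lvert H\rvert + \ln\frac{1}{\delta}\right)

% Example: |H| = 2^{20}, \varepsilon = 0.05, \delta = 0.01
m \;\ge\; 20\,\bigl(20\ln 2 + \ln 100\bigr) \approx 20\,(13.86 + 4.61) \approx 369.4
\quad\Rightarrow\quad m = 370 \text{ examples suffice}
```

For a large network the effective size of H (or infinite-class analogues such as the VC dimension) is so big that bounds of this form tend to say nothing useful, which fits the point that nothing comparable exists for large networks yet.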

Which is funny considering that neural networks have been a thing since at least the 90s.

NONE of them knows what's going on inside.

We are right back in the age of alchemy, where people speaking Latin and Greek threw more or less random things together to see what would happen, all the while claiming to be trying to make gold to keep the cash flowing.

And the number of us who build these models from scratch, from the ground up, is even smaller.

I've been selling it even longer than that and I refuse to use the word expert.