If you think of complex numbers in their polar form, everything is much simpler. If you know basic calculus, it can be intuitive.

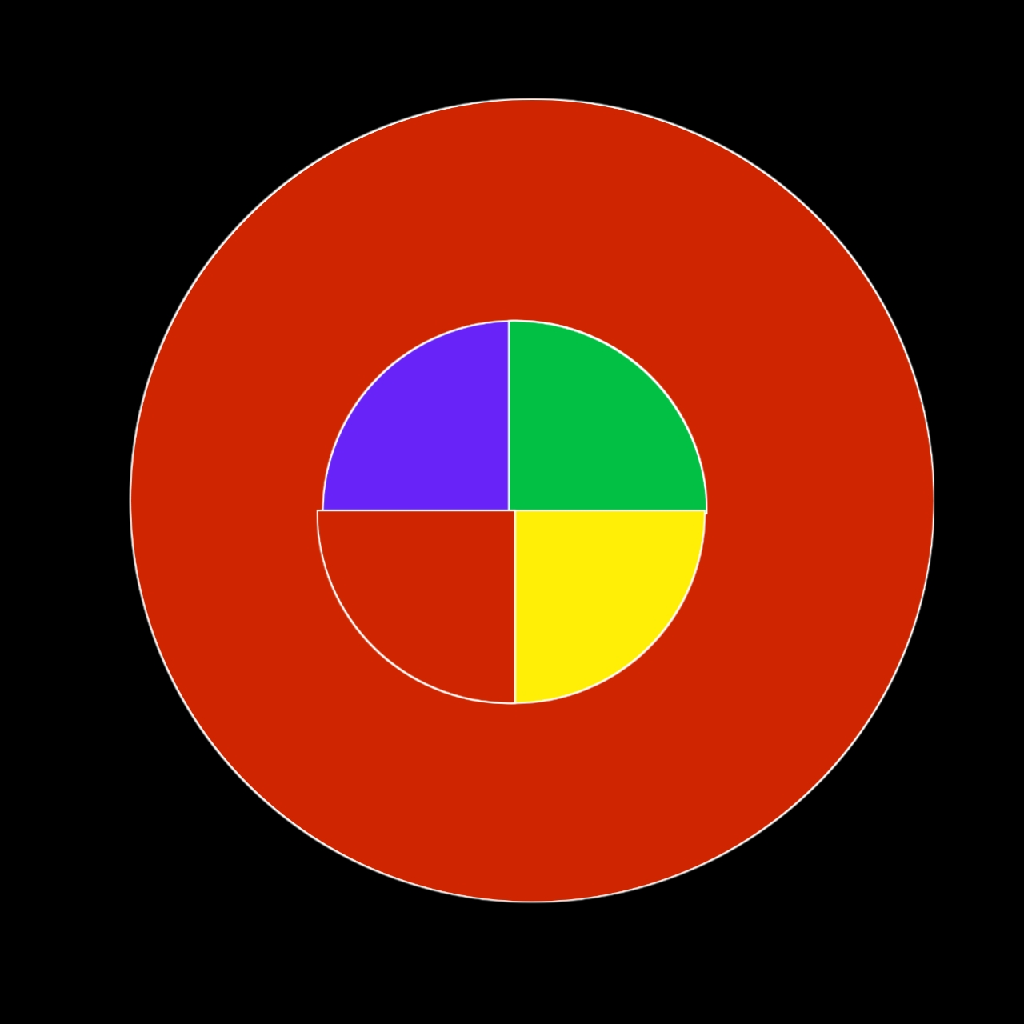

Instead of z = x + iy, write z = (r, t) where r is the distance from the origin and t is the angle from the positive x-axis. Now addition is trickier to write, but multiplication is simple: (a, b)(c, d) = (ac, b + d). That is, the lengths multiply and the angles add. Multiplication by a number (1, t) simply adds t to the angle. That is, multiplying a point by (1, t) is the same as rotating it counterclockwise about the origin by an angle t.
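If you want to convince yourself that the lengths multiply and the angles add, here is a minimal Python sketch using the standard `cmath` module. The helper name `polar_multiply` and the sample values are made up for illustration.

```python
import cmath
import math

def polar_multiply(p, q):
    """Multiply two complex numbers given as (length, angle) pairs."""
    (a, b), (c, d) = p, q
    return (a * c, b + d)  # lengths multiply, angles add

# Two sample points in polar form (arbitrary values chosen for illustration).
p = (2.0, math.pi / 6)
q = (3.0, math.pi / 3)

# Convert the polar product back to x + iy and compare it with the
# ordinary product of the two numbers written as x + iy.
via_polar = cmath.rect(*polar_multiply(p, q))
via_cartesian = cmath.rect(*p) * cmath.rect(*q)

print(via_polar)
print(via_cartesian)
print(abs(via_polar - via_cartesian))  # should be tiny (floating-point noise)
```

Both routes should land on the same point, up to rounding.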

The function f(t) = (1, t) then parameterizes a circular motion with a constant angular velocity of 1 radian per unit time. The velocity of a circular motion is tangential, that is, perpendicular to the current position, and here its magnitude is 1, so the derivative of our function is a constant 90 degree rotation of itself. In radians, that means f'(t) = (1, pi/2) f(t), where (1, pi/2) is just i. And now we have one of the simplest differential equations, whose solution can only be f(t) = k e^(t \* (1, pi/2)) = k e^(it) for some k. Given f(0) = 1, we have k = 1.
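A rough numerical check of this, as a sketch only: approximate f'(t) with a central difference and compare it with (1, pi/2) f(t). The helper `derivative`, the step size `h`, and the sample times are arbitrary choices for illustration.

```python
import cmath

def f(t):
    """The point (1, t) in polar form, converted to x + iy."""
    return cmath.rect(1.0, t)

def derivative(func, t, h=1e-6):
    """Central-difference approximation of func'(t)."""
    return (func(t + h) - func(t - h)) / (2 * h)

# The polar number (1, pi/2), which is i up to rounding.
quarter_turn = cmath.rect(1.0, cmath.pi / 2)

for t in (0.0, 1.0, 2.5):
    lhs = derivative(f, t)       # numerical f'(t)
    rhs = quarter_turn * f(t)    # f(t) rotated by 90 degrees
    print(t, abs(lhs - rhs))     # difference should be very small

# The same circular motion written as an exponential, evaluated at t = pi.
print(cmath.exp(1j * cmath.pi))  # close to -1, with a tiny imaginary part
```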

All that said, we now know that f(t) = e^(it) is a circular motion passing through f(0) = 1 with a rate of 1 radian per unit time, and e^(i pi) is halfway through a full rotation, which is -1.

If you don't know calculus, then consider the relationship between exponentiation and multiplication. We learn that when you take an interest rate of a fixed annual percent r and compound it n times a year, as you compound more and more frequently (i.e. as n gets larger and larger), the formula turns from multiplication (P(1 + r/n)^(nt)) to exponentiation (P e^(rt)). Thus, exponentiation is like a continuous series of tiny multiplications. Since, geometrically speaking, multiplying by a complex number (z, z^(2), z^(3), ...) causes us to rotate by a fixed amount each time, then complex exponentiation by a continuous real variable (z^(t) for t in [0,1]) causes us to rotate *continuously* over time. Now the precise nature of the numbers e and pi here might not be apparent, but that is the intuition behind why I say e^(it) draws a circular motion, and hopefully it's believable that e^(i pi) = -1.
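To see the compounding picture in action, here is a small Python sketch that applies the same compounding formula with an imaginary "rate" of i pi over one unit of time. The function name `compound` and the chosen values of n are just for illustration.

```python
import math

def compound(z, n):
    """Compound a rate z in n equal steps: (1 + z/n)^n."""
    return (1 + z / n) ** n

# With a real rate of 1, the result approaches e as n grows.
for n in (1, 10, 1000, 100000):
    print(n, compound(1.0, n))

# With an imaginary rate of i*pi, each tiny multiplication is (almost)
# a tiny rotation, and the result drifts toward -1 as n grows.
for n in (1, 10, 1000, 100000):
    print(n, compound(1j * math.pi, n))
```

For small n the product overshoots the unit circle noticeably, since multiplying by 1 + i pi/n also stretches a little. The stretch fades as n gets large.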

All explanations will tend to have an algebraic component (the exponential and the number e arise from an algebraic relationship in a fundamental geometric equation) and a geometric component (the number pi and its relationship to circles). The previous explanations are somewhat more geometric in nature. Here is a more algebraic one.

The real-valued function e^(x) arises naturally in many contexts. It's natural to wonder if it can be extended to the complex plane, and how. To tackle this, we can fall back on a tool we often use to calculate values of smooth functions, which is the Taylor series. Knowing that the derivative of e^(x) is itself (and that e^(0) = 1) immediately tells us that e^(x) = 1 + x + x^(2)/2! + x^(3)/3! + ..., and now we can simply plug in a complex value for x and see what happens (although we don't yet know if the result is even well-defined).

Let x = iy be a purely imaginary number, where y is a real number. Then substitution gives e^(x) = e^(iy) = 1 + iy + i^(2)y^(2)/2! + i^(3)y^(3)/3! + ..., and of course since i^(2) = -1, this can be simplified:

e^(iy) = 1 + iy - y^(2)/2! - iy^(3)/3! + y^(4)/4! + iy^(5)/5! - y^(6)/6! - ...

So we're alternating between real/imaginary and positive/negative. Let's factor it into a real and imaginary component: e^(iy) = a + bi, where

a = 1 - y^(2)/2! + y^(4)/4! - y^(6)/6! + ...

b = y - y^(3)/3! + y^(5)/5! - y^(7)/7! + ...

And here's the kicker: from our prolific experience with calculus of the real numbers, we instantly recognize these as the Taylor series a = cos(y) and b = sin(y), and thus conclude that if anything, e^(iy) = a + bi = cos(y) + i sin(y). Finally, we have e^(i pi) = cos(pi) + i sin(pi) = -1.
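If you'd rather see the series agree numerically, here is a short Python sketch that sums the first few dozen terms of 1 + x + x^(2)/2! + ... at x = iy and compares the result with cos(y) + i sin(y). The function name `exp_series`, the number of terms, and the sample y are arbitrary choices for illustration.

```python
import math

def exp_series(z, terms=30):
    """Partial sum of 1 + z + z^2/2! + z^3/3! + ... using `terms` terms."""
    total = 0
    term = 1  # the k = 0 term, z^0 / 0!
    for k in range(terms):
        total += term
        term = term * z / (k + 1)  # next term: z^(k+1) / (k+1)!
    return total

y = 1.2
value = exp_series(1j * y)
print(value)                              # partial sum of the series at x = iy
print(complex(math.cos(y), math.sin(y)))  # cos(y) + i sin(y)

# And the special case y = pi:
print(exp_series(1j * math.pi, terms=40))  # close to -1
```

The real part of the partial sum is the a series and the imaginary part is the b series, so this checks both at once.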

![\frac{1}{\varepsilon}\,{\mathrm{Im}}\left[ f(x+i\,\varepsilon) \right] = f'(x) + \mathcal{O}(\varepsilon^2)](https://lemmy.world/pictrs/image/a276b227-6e75-41a3-8c20-072ad1ca1846.png?format=webp&thumbnail=1024)